Hadoop is a MapReduce framework for parallel, distributed computing. It is a good fit when the same operations are performed on every record and records are not modified once created, so reads are far more frequent than writes, unlike the typical workload of a traditional relational database system. The advantage of Hadoop, or of any MapReduce framework, shows once the data is large enough, roughly 1 TB and up. Data beyond this point, approaching the petabyte range, is commonly referred to as big data. At that scale it is better to throw multiple machines at the task than to use a single machine with parallel programming. The technique used is MapReduce, which operates on key-value pairs.

A map operation is performed on each record; both its input and its output are key-value pairs. The map output is merged, sorted and passed to a reduce operation, which combines the values for each key and generates the final output. For example, suppose we have 12 years of student examination records across 15 subjects, 5,700,000 records in total. On this data set we can compute aggregates such as the highest score in a subject over the years, or the total number of failures reported across the years. To find the total failures, the map operation outputs a key-value pair every time it encounters a failed exam. The reduce job takes all such key-value pairs and emits a single key-value pair holding the total.
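The map, sort and reduce steps described above can be sketched in plain Python, without Hadoop itself. This is only an illustration of the data flow, not the job used in this post: the record layout `(student_id, year, subject, score)`, the pass mark of 40, the key name `"failed"` and the sample records are all assumptions made for the example.

```python
from itertools import groupby
from operator import itemgetter

PASS_MARK = 40  # assumption: the post does not state the pass mark


def map_failures(record):
    """Map step: emit ("failed", 1) for every failed exam, nothing otherwise."""
    _student_id, _year, _subject, score = record
    if score < PASS_MARK:
        yield ("failed", 1)


def map_scores(record):
    """Map step: emit (subject, score) so scores can be compared per subject."""
    _student_id, _year, subject, score = record
    yield (subject, score)


def reduce_sum(key, values):
    """Reduce step: add up all values that share one key."""
    return (key, sum(values))


def reduce_max(key, values):
    """Reduce step: keep the largest value that shares one key."""
    return (key, max(values))


def run_job(records, mapper, reducer):
    # Map: apply the mapper to every record and collect the emitted pairs.
    pairs = [pair for record in records for pair in mapper(record)]
    # Sort by key so that equal keys are adjacent, then group them.
    pairs.sort(key=itemgetter(0))
    # Reduce: call the reducer once per key with all of that key's values.
    return [
        reducer(key, [value for _, value in group])
        for key, group in groupby(pairs, key=itemgetter(0))
    ]


# Hypothetical sample records: (student_id, year, subject, score)
records = [
    (1, 2001, "math", 35),
    (2, 2001, "math", 72),
    (1, 2002, "physics", 28),
    (3, 2002, "math", 90),
    (2, 2003, "physics", 39),
]

total_failures = run_job(records, map_failures, reduce_sum)
highest_scores = run_job(records, map_scores, reduce_max)
print(total_failures)
print(highest_scores)
```

In a real Hadoop job the framework performs the sort and grouping between the two phases and spreads the map and reduce calls across machines; only the mapper and reducer logic is written by hand.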

The Hadoop standalone configuration processed these records in about 35-40 seconds. The start of the Hadoop run is shown here.

The end of the run is shown here.

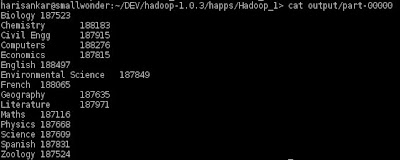

Output is here.

The same job in pseudo-distributed (single-node cluster) mode produces results for similar operations in about 40 seconds. The difference is that the input files have to be copied into the Hadoop Distributed File System (HDFS) and the output has to be copied or read back from HDFS. This is shown here.
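For readers who want to reproduce the HDFS round trip, here is a minimal Python sketch that only builds the command lines for copying input in, running the job and copying output back. It is not the set of commands used in this post: the local file name, the HDFS paths, the jar name and the main class are hypothetical placeholders. The `hdfs dfs -mkdir -p`, `hdfs dfs -put`, `hdfs dfs -get` and `hadoop jar` commands themselves are standard Hadoop CLI commands.

```python
import shlex


def hdfs_round_trip(local_input, hdfs_input, hdfs_output, local_output, jar, main_class):
    """Return the commands for one pseudo-distributed run, in execution order."""
    return [
        # Create the input directory in HDFS (no error if it already exists).
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_input],
        # Copy the local input file into HDFS.
        ["hdfs", "dfs", "-put", local_input, hdfs_input],
        # Run the MapReduce job; it reads from and writes to HDFS paths.
        ["hadoop", "jar", jar, main_class, hdfs_input, hdfs_output],
        # Copy the job output from HDFS back to the local file system.
        ["hdfs", "dfs", "-get", hdfs_output, local_output],
    ]


# All names below are hypothetical placeholders for illustration.
commands = hdfs_round_trip(
    local_input="records.csv",
    hdfs_input="/user/demo/input",
    hdfs_output="/user/demo/output",
    local_output="./output",
    jar="exam-stats.jar",
    main_class="FailureCount",
)

for cmd in commands:
    print(shlex.join(cmd))
```

To actually execute the steps on a machine with Hadoop installed, each list can be passed to `subprocess.run(cmd, check=True)`. Note that the job fails if the HDFS output directory already exists, so it has to be removed or renamed between runs.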
