Embedding Pig in Python - Hatari!

---------------------------------------------

Pig Latin has no control structures of the kind found in Java and other high-level languages. To get more control over the execution context, for example to loop through a set of parameters, we can embed Pig in Python. The embedded script runs under Jython, so only Python 2 (up to 2.7) is supported, not Python 3. At first this may not seem very useful. But if you have a single parameterised Pig script, like the one in the previous post, you can supply its parameters from Python. And since Python can also be used to write User Defined Functions, all the code (Pig, Python, UDFs) resides in the same project, which is useful when things get more complex. In the previous post I used a Java project to create a Java UDF that was called from Pig, so I had two deployments: a Pig script and a Java jar. If we embed Pig in Python, we have only one deployment: the Python code.

1) Write the Pig script in a file.

2) Use the Pig.compileFromFile() function to load and compile your script.

3) Bind the compiled script with bind(), passing your parameters as a list of maps. Yes, a list of maps: one map per set of parameters.

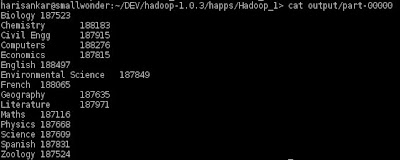

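4) Run the script using runSingle() when a single map of parameters is bound, or run() when a list of maps is bound.

5) Check how things went using the PigStats object that runSingle() returns (run() returns one per map). Call isSuccessful() on it.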
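The five steps can be put together roughly as follows. This is a minimal sketch, not the code from the screenshot: the script name `myscript.pig`, the parameter keys `input` and `output`, and the helper `run_for_params` are all made up for illustration. It calls run() rather than runSingle() because a list of maps is bound, which is my reading of the Pig scripting API. The `try`/`except` around the import is there only so the file can also be loaded outside Pig.

```python
# Sketch only: this runs for real when launched through Pig (e.g. `pig driver.py`),
# where Jython makes org.apache.pig.scripting importable.
try:
    from org.apache.pig.scripting import Pig
except ImportError:
    Pig = None  # plain CPython: the Pig classes are not available


def run_for_params(pig, script_path, param_sets):
    """Compile a Pig script once, bind it to each parameter map, and run it.

    Returns one boolean per parameter map, True where that run succeeded.
    (Hypothetical helper, not part of the Pig API.)
    """
    compiled = pig.compileFromFile(script_path)   # step 2: load and compile
    bound = compiled.bind(param_sets)             # step 3: bind a list of maps
    stats_list = bound.run()                      # step 4: one PigStats per map
    return [stats.isSuccessful() for stats in stats_list]  # step 5: status


# Hypothetical parameters; the keys must match the $names used in the script.
PARAM_SETS = [
    {"input": "data/2013-01.txt", "output": "out/2013-01"},
    {"input": "data/2013-02.txt", "output": "out/2013-02"},
]

if Pig is not None:
    results = run_for_params(Pig, "myscript.pig", PARAM_SETS)
    for params, ok in zip(PARAM_SETS, results):
        print("%s -> %s" % (params["output"], "ok" if ok else "failed"))
```

Passing the `Pig` class in as an argument keeps the helper easy to exercise with a stand-in object when Pig itself is not installed.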

Better yet, do this again for all your scripts. You can even read the parameters from a file, or add threading so that each thread executes the script with one set of parameters.
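A rough sketch of that idea, using only the Python standard library. The CSV layout (a header row naming the parameters), the helper names `parse_param_sets` and `run_in_threads`, and the `run_one` callable are assumptions for illustration. I have not verified that Pig's scripting objects are safe to share across threads, so the sketch takes a plain callable and leaves the Pig call to the caller.

```python
import csv
import threading


def parse_param_sets(lines):
    """Turn CSV lines (header row first) into a list of parameter maps."""
    return [dict(row) for row in csv.DictReader(lines)]


def run_in_threads(run_one, param_sets):
    """Call run_one(params) in its own thread for each map; keep input order."""
    results = [None] * len(param_sets)

    def worker(index, params):
        # A slot stays None if run_one raises inside its thread.
        results[index] = run_one(params)

    threads = [threading.Thread(target=worker, args=(i, p))
               for i, p in enumerate(param_sets)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


# Inline lines stand in for a parameter file here; with a real file you
# would pass the open file object instead of a list.
param_sets = parse_param_sets(["input,output", "a.txt,out_a", "b.txt,out_b"])
print(run_in_threads(lambda p: "would run with %s" % p["input"], param_sets))
```

Under Pig, `run_one` could be something like `lambda p: compiled.bind(p).runSingle().isSuccessful()`, where `compiled` is the object returned by compileFromFile(). Since run() on a list of maps already executes every binding, threads are worth adding only if that turns out to be too slow for your case.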

Ref: More details can be found in the presentation by Julien Le Dem at http://www.slideshare.net/julienledem/presentation-pig-scripting.

Ref: A good read is Chapter 9 of the book Programming Pig by Alan F. Gates.