Here we look at the implementation of a web search engine. The project already stores word counts for web pages: pages added to the project are crawled periodically and the word counts of their content are stored. This was primarily for generating word clouds and analysing text content. However, the word counts can also be used to build a search index for the set of web pages. Given a set of words, the search index returns the list of pages in which the words occur. In addition, each word count record carries the timestamp at which the page was processed, which helps to find more recent occurrences.

Quick overview of steps involved:

A) Filter word counts to a time period, such as the past 4 (or N) days.

B) Build a trie data structure with the data.

C) Compress the trie so that it can be held in memory.

D) For a given search string made up of multiple words, find the set of web pages where the words occur. The compressed trie helps with this. Time complexity is described below.

E) Find the intersection of the sets of web pages.

F) Extract the required information and send back the results. As in other search engines, this includes the full URL of the page, the time of crawling (word count generation) and a title.

G) Cache information as necessary to speed up the web view.
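Steps A to F can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's code: the record shape `(page_id, crawled_at, {word: count})`, the nested-dict trie (uncompressed here), and the names `filter_recent`, `build_trie`, `lookup` and `search` are all hypothetical.

```python
from datetime import datetime, timedelta

PAGES = "_pages"  # multi-character key, so it cannot clash with a one-character edge


def filter_recent(records, now, days=4):
    """Step A: keep only word counts crawled within the last `days` days."""
    cutoff = now - timedelta(days=days)
    return [r for r in records if r[1] >= cutoff]


def build_trie(records):
    """Step B: nested-dict trie; the node ending a word holds a set of page ids."""
    root = {}
    for page_id, _crawled_at, counts in records:
        for word in counts:
            node = root
            for ch in word.lower():
                node = node.setdefault(ch, {})
            node.setdefault(PAGES, set()).add(page_id)
    return root


def lookup(trie, word):
    """Step D: follow one edge per character, so the cost is O(len(word))."""
    node = trie
    for ch in word.lower():
        node = node.get(ch)
        if node is None:
            return set()
    return set(node.get(PAGES, ()))


def search(trie, query, crawl_times):
    """Steps D to F: intersect the per-word page sets, newest crawl first."""
    words = query.split()
    if not words:
        return []
    pages = set.intersection(*(lookup(trie, w) for w in words))
    return sorted(pages, key=lambda p: crawl_times[p], reverse=True)


now = datetime(2020, 1, 10)
records = [
    ("page-1", datetime(2020, 1, 9), {"python": 3, "trie": 1}),
    ("page-2", datetime(2020, 1, 8), {"python": 2, "search": 5}),
    ("page-3", datetime(2020, 1, 1), {"python": 1, "trie": 4}),  # older than 4 days
]
recent = filter_recent(records, now)
trie = build_trie(recent)
crawl_times = {page_id: ts for page_id, ts, _ in recent}

print(search(trie, "python", crawl_times))       # ['page-1', 'page-2']
print(search(trie, "python trie", crawl_times))  # ['page-1']
```

Fetching the URL and title for each resulting page id (step F) and caching (step G) would sit on top of `search` and are left out of the sketch.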

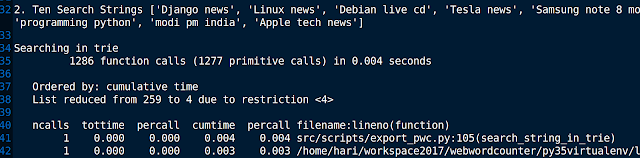

Runtimes with cProfile are as follows:

1) Building the trie takes 3.583 seconds for 173693 words. The pickled size is 119.2 MB.

3) Searching, including fetching the resulting web pages: ~4 ms

4) Searching all 10 strings above, including fetching results: ~116 ms
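For readers who want to take similar measurements, the following is a minimal sketch of timing an index build with `cProfile` and measuring its pickled size. The `build_index` function and the generated word list are stand-ins (assumptions), not the project's trie or data, so the numbers it prints will not match those above.

```python
import cProfile
import pickle
import pstats


def build_index(words):
    """Hypothetical stand-in for the real trie build: a nested-dict trie."""
    root = {}
    for word in words:
        node = root
        for ch in word:
            node = node.setdefault(ch, {})
        node["_end"] = True  # marks the end of a complete word
    return root


words = [f"word{i}" for i in range(10000)]  # synthetic data for the sketch

profiler = cProfile.Profile()
profiler.enable()
index = build_index(words)
profiler.disable()

# Report the five most expensive entries by cumulative time.
pstats.Stats(profiler).sort_stats("cumulative").print_stats(5)

pickled_size = len(pickle.dumps(index))  # serialised size in bytes
print(f"{len(words)} words, pickled size {pickled_size} bytes")
```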

Some screenshots of the engine at work are shown.

Apart from the trie index, the rest of the data is already part of the project database. The trie itself is not stored in the database; it is generated when required, compressed and held in memory.

Quick overview of tries: At the core of the index is a data structure called a trie (here, a compressed one). A trie is an m-ary tree in which each node branches on the next character of a key. For an alphabet of K unique characters, each node has K+1 pointers. The number of nodes depends on the keys that are inserted. For a given trie, let S be the node count, N the key count and L the length of the longest key. A search for any key then takes O(L) time, independent of K and N. The storage requirement is (K+1) x S x P bits, where P is the number of bits in a pointer; the key count N affects storage only through S.
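A minimal sketch of such a trie follows, to make the K+1 pointers and the O(L) search concrete. It assumes a lowercase ASCII alphabet (K = 26) and treats the extra pointer as the slot for the value stored at the end of a key; both are assumptions for illustration, and the class names are hypothetical.

```python
import string

ALPHABET = string.ascii_lowercase                  # K = 26 (assumed character set)
SLOT = {ch: i for i, ch in enumerate(ALPHABET)}    # character -> child index


class Node:
    __slots__ = ("children", "value")

    def __init__(self):
        self.children = [None] * len(ALPHABET)     # K child pointers
        self.value = None                          # the (K+1)-th pointer: payload


class Trie:
    def __init__(self):
        self.root = Node()
        self.node_count = 1                        # S, counting the root

    def insert(self, key, value):
        node = self.root
        for ch in key:
            i = SLOT[ch]
            if node.children[i] is None:
                node.children[i] = Node()
                self.node_count += 1
            node = node.children[i]
        node.value = value

    def search(self, key):
        """Follows one pointer per character: O(L) regardless of K and N."""
        node = self.root
        for ch in key:
            i = SLOT.get(ch)
            if i is None or node.children[i] is None:
                return None
            node = node.children[i]
        return node.value


trie = Trie()
for word, pages in [("tree", {1}), ("trie", {1, 2}), ("try", {3})]:
    trie.insert(word, pages)

print(trie.search("trie"))   # {1, 2}
print(trie.search("tr"))     # None: a prefix only, no key ends here
print(trie.node_count)       # 8

P = 64                       # assumed pointer width in bits
print((len(ALPHABET) + 1) * trie.node_count * P)   # 13824 bits
```

The three keys share the prefix "tr", which is why only 8 nodes are needed; most of the 27 slots in each node stay empty, and that waste is what compression targets.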

Compressing the trie: Once the trie has been constructed it can be compressed. Several techniques, such as Patricia tries and de la Briandais trees, can be used. However, the project uses a different technique. Any trie with M nodes over a K-character set can be represented by an M x K table. The table can be shrunk further by storing it as a sparse matrix. The sizes below show the difference in the serialised size of the trie index.
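The table idea can be sketched as follows. This is one possible reading, not the project's actual implementation: each row is a node, each column a character, and a cell holds the row number of the child (0 meaning "no edge", which is safe because the root, row 0, is never a child). The sparse form here is a plain dictionary keyed by `(row, column)`; the lowercase alphabet and all names are assumptions.

```python
import string

ALPHABET = string.ascii_lowercase                  # K = 26 (assumed character set)
COL = {ch: i for i, ch in enumerate(ALPHABET)}     # character -> column index


def build_table(words):
    """Dense M x K table: table[row][col] is the child row, 0 means no edge."""
    table = [[0] * len(ALPHABET)]                  # row 0 is the root
    accepting = set()                              # rows where a word ends
    for word in words:
        row = 0
        for ch in word:
            col = COL[ch]
            if table[row][col] == 0:
                table.append([0] * len(ALPHABET))
                table[row][col] = len(table) - 1
            row = table[row][col]
        accepting.add(row)
    return table, accepting


def to_sparse(table):
    """Keep only the non-empty cells, keyed by (row, column)."""
    return {(r, c): child
            for r, cells in enumerate(table)
            for c, child in enumerate(cells) if child}


def contains(sparse, accepting, word):
    row = 0
    for ch in word:
        row = sparse.get((row, COL.get(ch)))
        if row is None:
            return False
    return row in accepting


table, accepting = build_table(["tree", "trie", "try"])
sparse = to_sparse(table)

print(len(table) * len(ALPHABET))          # 208 cells in the dense table
print(len(sparse))                         # 7 cells in the sparse table
print(contains(sparse, accepting, "trie")) # True
print(contains(sparse, accepting, "tr"))   # False
```

A trie with M nodes has only M - 1 edges, so the sparse form stores M - 1 cells instead of M x K, which is where the saving comes from.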

For the 79 web pages in the project, there are at least 2 crawls within a week, so ~160 word count data rows for the web pages. Object sizes were also monitored using pympler trackers for Python 3.

Uncompressed Trie size 25628388 bytes ~ 25 MB

Compressed Trie size 21586721 bytes ~ 21 MB

Compressed Trie with minimum selected data in leaf nodes 7281173 bytes ~ 7.2 MB

This 7.2 MB trie index can be held in memory or cached.

The search results are in decreasing order of timestamps.

The architecture of the crawler project was discussed previously here. Crawling and word counting are executed as Celery async tasks. This architecture is shown below.

Future work:

1) Currently the intersection of the page sets for the words is used, which is an implicit AND. More operators such as OR and NOT can be supported using expression trees.

2) Storing the pages themselves in the filesystem for reference would be great, but this is not feasible with the present disk allowance.

3) Since the word counts are timestamped, a date-time search window option can be offered to users. However, holding the index over a longer period of time increases the size of the index too.

4) It would be feasible to rank the pages on a more complex parameter than just timestamps. Relevant visits and counts can be used along with timestamps.

5) Word edit distance can be used to correct words as in popular search engines.
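As an illustration of item 5, here is a minimal sketch of spelling correction with Levenshtein edit distance. It is not part of the project; the `suggest` helper, its `max_distance` threshold and the small vocabulary are hypothetical, and a real index would take its vocabulary from the trie keys.

```python
def edit_distance(a, b):
    """Levenshtein distance, computed one row at a time."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute or match
        prev = curr
    return prev[-1]


def suggest(word, vocabulary, max_distance=2):
    """Return the closest known word, or None if nothing is close enough."""
    if not vocabulary:
        return None
    best = min(vocabulary, key=lambda v: (edit_distance(word, v), v))
    return best if edit_distance(word, best) <= max_distance else None


vocabulary = ["search", "trie", "python"]
print(edit_distance("kitten", "sitting"))   # 3
print(suggest("serach", vocabulary))        # search
print(suggest("zzzzzzzz", vocabulary))      # None
```

Comparing the query against every vocabulary word is linear in the vocabulary size; for a large index the candidates would need to be narrowed first, for example by walking the trie.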