In this post we look at how the throughput of my data visualization web application was improved to 267 requests per second in this iteration. This is a significant improvement, achieved with additional caching and changes to the application architecture. Note that the new throughput is for a web application with considerably more functionality than before, such as language support and machine learning models.

Background

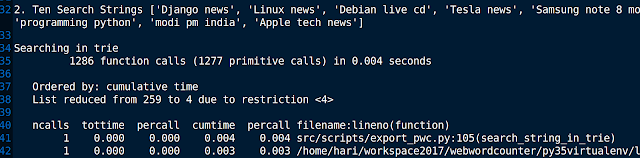

Previous JMeter test results with load balanced deployment and model caching are here. The same load test is used again to measure the improvement.

The load balanced architecture is described here.

JMeter test results with new throughput

The JMeter Response Time Graph is shown below.

The JMeter Graph Results are shown below.

Techniques so far and modifications applied

The previous caching technique focused on avoiding database hits.

It used a custom caching library based on Django models. In addition, it marked static files such as JavaScript and images with downstream Cache-Control headers so that the browser does not download them each time.

So far, so good. However:

1) There is scope for improvement in the application, especially since a set of new features has been added, of which language support is the most prominent. The Django application builds HTML templates to serve to clients. These include the navigation bar, user profile and page footer templates. Some template content has to change based on, say, the time, the user or the user's language. However, most templates, once generated for a given combination of these, can remain the same and be reused. That's where template fragment caching comes in. A few things that can trip you up if not understood:

- The gain from caching a single template fragment is tiny. The return on investing in template caching only shows as the number of concurrent requests on the application goes up.

- Locality of the cache also matters for template fragments. The time saved on each request is small, so even a trip to a cache on a different host will cost more than just building the template. This mandates a local cache, which is a modification to the architecture.
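
One way to act on the locality point in Django is sketched below as a settings fragment. It assumes a shared cache on another host for general data plus an in-process memory cache for fragments; the Memcached backend, the host name `cache-host`, the timeout and the entry limit are placeholders chosen for illustration, not the values used in this deployment. Django's `{% cache %}` template tag uses the cache alias `template_fragments` when one is defined and falls back to `default` otherwise.

```python
# settings.py (fragment): a sketch, not this application's actual configuration.
CACHES = {
    # Shared cache on another host (assumed here to be Memcached).
    "default": {
        "BACKEND": "django.core.cache.backends.memcached.PyMemcacheCache",
        "LOCATION": "cache-host:11211",  # hypothetical host and port
    },
    # Local, in-process cache. The {% cache %} tag picks this alias up
    # automatically, so fragment lookups never leave the host.
    "template_fragments": {
        "BACKEND": "django.core.cache.backends.locmem.LocMemCache",
        "LOCATION": "fragments",           # just a name for this in-memory store
        "TIMEOUT": 300,                    # seconds; placeholder value
        "OPTIONS": {"MAX_ENTRIES": 1000},  # placeholder value
    },
}
```

In a template, a fragment that varies by language could then be wrapped as `{% load cache %}{% cache 300 navbar LANGUAGE_CODE %} ... {% endcache %}`, where `navbar` is an illustrative fragment name and each extra argument after the name makes the cached copy vary per value. Note that `LocMemCache` is per process, so every worker process keeps its own copy of each fragment.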

2) Each view generates a response based on the request. For most requests, the same request always produces the same response. For example, the response to a request for 'Word counts for www.cnn.com at 3 PM on 25 Dec 2018' is always going to be the same. Such views need to be identified and cached, which improves throughput.
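
In Django, the built-in `cache_page` decorator from `django.views.decorators.cache` provides this kind of per-view caching. The framework-free sketch below only illustrates the idea behind it: the response is stored under a key built from the request parameters and reused until it expires. The names `cache_response` and `word_counts`, and the one hour timeout, are hypothetical and not taken from the application.

```python
import time
from functools import wraps


def cache_response(timeout_seconds, store=None, clock=time.monotonic):
    """Cache a view-like function's result, keyed by its arguments."""
    store = {} if store is None else store

    def decorator(view):
        @wraps(view)
        def wrapper(*args, **kwargs):
            key = (view.__name__, args, tuple(sorted(kwargs.items())))
            hit = store.get(key)
            if hit is not None and hit[0] > clock():
                return hit[1]  # still fresh: skip the expensive work
            response = view(*args, **kwargs)
            store[key] = (clock() + timeout_seconds, response)
            return response

        return wrapper

    return decorator


calls = []  # records how often the expensive body actually runs


@cache_response(timeout_seconds=3600)
def word_counts(site, timestamp):
    # Hypothetical stand-in for the real view: the result for a given
    # site and point in time never changes, so it is safe to cache.
    calls.append((site, timestamp))
    return f"word counts for {site} at {timestamp}"


first = word_counts("www.cnn.com", "2018-12-25T15:00")
second = word_counts("www.cnn.com", "2018-12-25T15:00")  # served from the cache
other = word_counts("www.cnn.com", "2018-12-25T16:00")   # different key, recomputed
```

Only views whose output depends purely on the request parameters are good candidates; anything that varies per user or per session needs those values in the key as well.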

3) Finally, one physical aspect of your deployment that can affect performance is thermal throttling of the CPUs. It is a good idea to check for this too.
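
As a rough way to check for throttling, the sketch below reads the per-core throttle counters that Linux exposes on many Intel systems under `/sys/devices/system/cpu/cpuN/thermal_throttle/core_throttle_count`. This assumes a Linux host that provides these files; on other platforms the function simply returns an empty dict. The function name `read_throttle_counts` is made up for this example.

```python
from pathlib import Path


def read_throttle_counts(cpu_root="/sys/devices/system/cpu"):
    """Return {cpu_name: throttle_count} for CPUs that expose a counter."""
    counts = {}
    pattern = "cpu[0-9]*/thermal_throttle/core_throttle_count"
    for counter in sorted(Path(cpu_root).glob(pattern)):
        cpu_name = counter.parent.parent.name  # e.g. "cpu0"
        try:
            counts[cpu_name] = int(counter.read_text().strip())
        except (OSError, ValueError):
            continue  # unreadable or malformed counter: skip it
    return counts


if __name__ == "__main__":
    for cpu, count in read_throttle_counts().items():
        print(f"{cpu}: {count} throttle event(s) recorded")
```

Running it before and after a load test and comparing the counts gives a hint: if the numbers went up during the run, the CPUs were throttled while the test was measuring throughput.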