This post looks at how planning for concurrency when designing software can reduce run time.

At the basic level Software = Data structures + Algorithms.

At an advanced level Faster Software = Data structures + Algorithms + Concurrency.

With multiple threads we can get more work done in the background or concurrently. But more threads do not necessarily mean faster software. As more threads are introduced, they usually have to be synchronized with one or more mechanisms such as locks, and several threads may end up waiting on the same lock to perform an operation on a data structure. The threads then effectively run sequentially. This is what happens when an ordinary data structure is wrapped with a single lock for thread synchronization. Not only does the data structure become a performance bottleneck, but composite operations (several calls that must act as one step) are still not atomic, which introduces synchronization issues.
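The composite-operation problem can be sketched with a map wrapped by `Collections.synchronizedMap`, which guards every call with one lock. The class and method names below (`CompositeOps`, `unsafeIncrement`, `safeIncrement`, `runSafe`) are made up for illustration and are not from the original demo.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical example class; not part of the original demo.
class CompositeOps {
    // Every method of this wrapper takes the same single lock (the map itself).
    static final Map<String, Integer> counts =
            Collections.synchronizedMap(new HashMap<>());

    // Broken: get() and put() are each atomic, but another thread can
    // update the entry between the two calls, so increments can be lost.
    static void unsafeIncrement(String key) {
        Integer old = counts.get(key);
        counts.put(key, old == null ? 1 : old + 1);
    }

    // Fixed: hold the wrapper's lock across the whole read-modify-write.
    static void safeIncrement(String key) {
        synchronized (counts) {
            Integer old = counts.get(key);
            counts.put(key, old == null ? 1 : old + 1);
        }
    }

    // Runs safeIncrement from two threads and returns the final count.
    // perThread is assumed to be at least 1.
    static int runSafe(int perThread) {
        counts.clear();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                safeIncrement("k");
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return counts.get("k");
    }
}
```

The fix is correct, but note that it makes every increment queue on the one lock, which is exactly the bottleneck described above.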

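The collections in the Java concurrent package (`java.util.concurrent`) are thread safe, and some go further and employ a method known as lock striping (historically `ConcurrentHashMap`, which guarded its table with an array of segment locks before Java 8). Lock striping means splitting a high-contention lock into multiple locks without adversely affecting the integrity of the protected data.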

An Example of Concurrency Using Lock Striping

Problem: A hash table is used by multiple threads to insert and delete items. The hash table supports lock striping: it takes the number of locks to use to protect the integrity of the underlying data.
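A minimal sketch of such a table is given here; it is not the original demo code. The class name `StripedHashTable`, its methods, the fixed bucket count (no resizing), and the `bucket % lockCount` mapping are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical striped hash table of int keys; a sketch, not the post's code.
class StripedHashTable {
    private final List<List<Integer>> buckets = new ArrayList<>();
    private final Object[] locks;

    StripedHashTable(int bucketCount, int lockCount) {
        if (lockCount < 1 || lockCount > bucketCount) {
            throw new IllegalArgumentException("need 1 <= lockCount <= bucketCount");
        }
        for (int i = 0; i < bucketCount; i++) {
            buckets.add(new ArrayList<>());
        }
        locks = new Object[lockCount];
        for (int i = 0; i < lockCount; i++) {
            locks[i] = new Object();
        }
    }

    private int bucketIndex(int key) {
        return Math.floorMod(Integer.hashCode(key), buckets.size());
    }

    // Assumed mapping: bucket b is always guarded by lock b % lockCount.
    private Object lockFor(int bucket) {
        return locks[bucket % locks.length];
    }

    // Returns false if the key was already present.
    boolean insert(int key) {
        int b = bucketIndex(key);
        synchronized (lockFor(b)) {
            List<Integer> chain = buckets.get(b);
            if (chain.contains(key)) {
                return false;
            }
            chain.add(key);
            return true;
        }
    }

    // Returns true if the key was present and has been removed.
    boolean delete(int key) {
        int b = bucketIndex(key);
        synchronized (lockFor(b)) {
            // Integer.valueOf selects remove(Object) rather than remove(int index).
            return buckets.get(b).remove(Integer.valueOf(key));
        }
    }

    boolean contains(int key) {
        int b = bucketIndex(key);
        synchronized (lockFor(b)) {
            return buckets.get(b).contains(key);
        }
    }

    // Locks one stripe at a time, so the result is not an atomic snapshot
    // while other threads are still writing.
    int size() {
        int total = 0;
        for (int b = 0; b < buckets.size(); b++) {
            synchronized (lockFor(b)) {
                total += buckets.get(b).size();
            }
        }
        return total;
    }
}
```

With `lockCount` set to 1 this degenerates to the single-lock design. With a larger value, two threads block each other only when their keys land in buckets that share a stripe.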

Case 1: A single lock protects all data structure operations. Executing 10000 inserts and 10000 deletes from two threads simultaneously takes 7343811 ns (about 7.3 ms) on the test system.

Case 2: Ten locks protect the data structure operations, i.e. for a hash table with N entries, each of the 10 locks protects N / 10 of them. The run time is 3067498 ns (about 3.1 ms), roughly 42% of the single-lock run time, so striping saves more than half of it.
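A measurement like the ones above could be taken with a small harness along these lines. This is a sketch under assumptions: the post does not say how the work was split, so one thread inserts while the other deletes, and `StripingBenchmark` and `timeNanos` are hypothetical names. The single-lock case is stood in for by `Collections.synchronizedSet`; a striped table would be plugged in through the same two callbacks.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;
import java.util.function.IntConsumer;

// Hypothetical timing harness; not the code that produced the numbers above.
class StripingBenchmark {
    // Starts one inserting and one deleting thread, waits for both,
    // and returns the elapsed wall-clock time in nanoseconds.
    static long timeNanos(IntConsumer insert, IntConsumer delete, int ops) {
        Thread inserter = new Thread(() -> {
            for (int i = 0; i < ops; i++) {
                insert.accept(i);
            }
        });
        Thread deleter = new Thread(() -> {
            for (int i = 0; i < ops; i++) {
                delete.accept(i);
            }
        });
        long start = System.nanoTime();
        inserter.start();
        deleter.start();
        try {
            inserter.join();
            deleter.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        // Single-lock baseline: every add/remove synchronizes on one mutex.
        Set<Integer> singleLock = Collections.synchronizedSet(new HashSet<>());
        long ns = timeNanos(singleLock::add, singleLock::remove, 10_000);
        System.out.println("single lock: " + ns + " ns");
    }
}
```

A single run of a few milliseconds is noisy and includes thread start-up and JIT warm-up, so repeating each case several times and comparing typical values gives a fairer picture than one reading.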

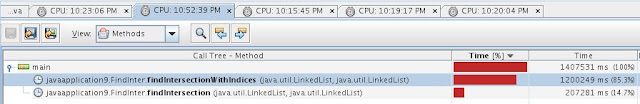

The run times for the two cases (Case 1 and Case 2), as reported by the NetBeans profiler, are shown in the accompanying screenshot.

The lock striping demo shows that striping lets each thread wait only on a lock protecting a narrow segment of the data, rather than on one lock for the whole structure. However, identifying such scenarios, and then updating and maintaining the code, can be challenging. Java uses the same technique in its concurrency package. (All code for the demo is written in Java.)